In Apple’s own words, NSMapTable is a mutable collection modeled after NSDictionary that provides different options (see also the overviews from Mike Ash, NSHipster and objc.io). The major option is to have keys and/or values held “weakly”, in such a way that entries are removed when one of the objects is reclaimed. This behavior relies on zeroing weak references, which were first introduced with garbage collection and later adapted to ARC; NSMapTable supports them under ARC starting with Mountain Lion (OS X 10.8).

Zeroing weak references are magic in that they turn to nil automatically when the object they point to is deallocated. For instance, a zeroing weak reference held by variable foo could have the value 0x60000001bf10, pointing to an instance of NSString. But the instant that object is deallocated, the value of foo changes to 0x000000000000.
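Swift’s `weak` references are zeroed in the same way, so the example above can be sketched in a few lines of pure Swift (no NSMapTable involved). `Payload` is a hypothetical class standing in for the NSString instance, and the addresses are not printed since they vary from run to run.

```swift
// Hypothetical stand-in for the NSString instance in the example above.
final class Payload {
    let text: String
    init(text: String) { self.text = text }
}

var strong: Payload? = Payload(text: "hello")   // the only strong reference
weak var foo: Payload? = strong                 // zeroing weak reference: no retain

print(foo?.text ?? "nil")   // hello (the object is still alive)
strong = nil                // last strong reference dropped: the object is deallocated
print(foo?.text ?? "nil")   // nil (foo was zeroed automatically)
```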

The use of zeroing weak references in combination with NSMapTable has the potential to simplify object management by providing automatic removal of unused entries. Unfortunately, NSMapTable does not fulfill all its promises.

The Promises of NSMapTable

Let’s imagine a hypothetical FooNotificationCenter class, whose API lets an observer register a selector to be called for a given notification name, using addObserver:selector:name:.

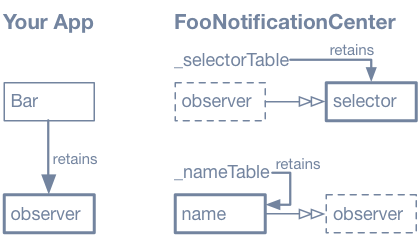

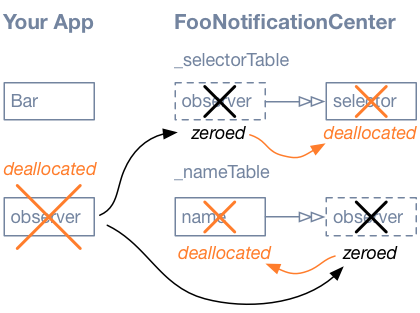

Using two NSMapTable instances, a very simple implementation of FooNotificationCenter could have the tables retain the selector and name, but only hold weakly onto the observer.

When the observer is deallocated, the corresponding references will be zeroed out in the NSMapTables, the entries will “disappear” automatically, and the name and selector objects will be deallocated. There is no need for the observer to explicitly remove itself from our FooNotificationCenter. It will happen automatically when the observer is deallocated… at least in theory.
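For illustration, here is a minimal sketch of that design in pure Swift. It does not use NSMapTable: each registration keeps the observer in a `weak` field and the name and callback strongly. A closure that receives the observer stands in for the selector, and `post(name:)` and `registrationCount` are hypothetical additions. Rather than trusting dead registrations to disappear on their own, the sketch drops them explicitly whenever the center is accessed. That costs a linear scan, but it keeps the count honest.

```swift
// Hypothetical Swift stand-in for FooNotificationCenter: observers are held
// weakly, names and callbacks strongly.
final class FooNotificationCenter {
    private struct Registration {
        weak var observer: AnyObject?      // zeroing weak reference
        let name: String
        let handler: (AnyObject) -> Void   // receives the observer, like a selector
    }
    private var registrations: [Registration] = []

    func addObserver(_ observer: AnyObject, name: String,
                     handler: @escaping (AnyObject) -> Void) {
        registrations.append(Registration(observer: observer, name: name, handler: handler))
    }

    func post(name: String) {
        purge()
        for registration in registrations where registration.name == name {
            if let observer = registration.observer { registration.handler(observer) }
        }
    }

    var registrationCount: Int {
        purge()
        return registrations.count
    }

    // Drop registrations whose observer was deallocated (its weak field is nil).
    private func purge() { registrations.removeAll { $0.observer == nil } }
}

final class Listener { var received = 0 }

let center = FooNotificationCenter()
var listener: Listener? = Listener()
// The handler gets the observer as an argument, so it does not capture it strongly.
center.addObserver(listener!, name: "Foo") { ($0 as! Listener).received += 1 }
center.post(name: "Foo")
print(listener!.received)          // 1
listener = nil                     // no explicit removal call
print(center.registrationCount)    // 0
```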

Probing NSMapTable

I recently struggled with unexpected behaviors in NSMapTable and decided to take a closer look. I came to the unfortunate conclusion that NSMapTable has undocumented limitations in its handling of zeroing weak references (at least under ARC, though I expect similar results with manual memory management).

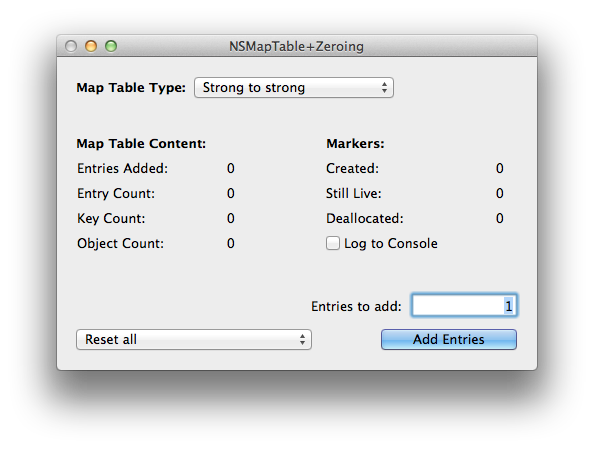

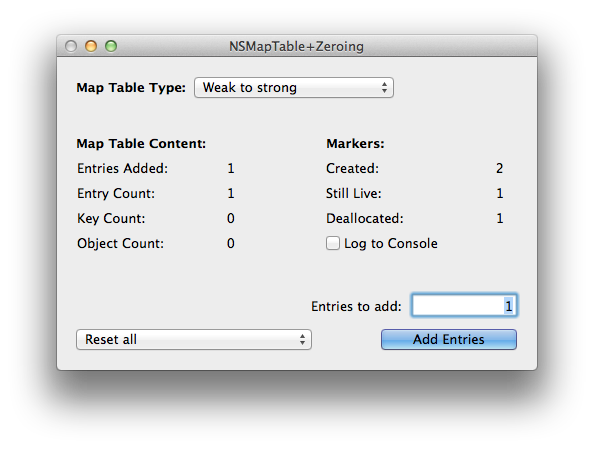

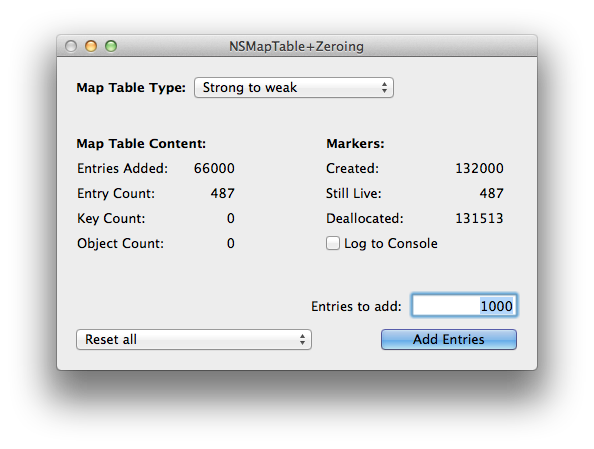

To see NSMapTable in action, I wrote a simple test application that exercises NSMapTable, published on GitHub. The application manages a single instance of NSMapTable. Here is what it looks like (if you run the app, use the tooltips to get more info on the interface elements):

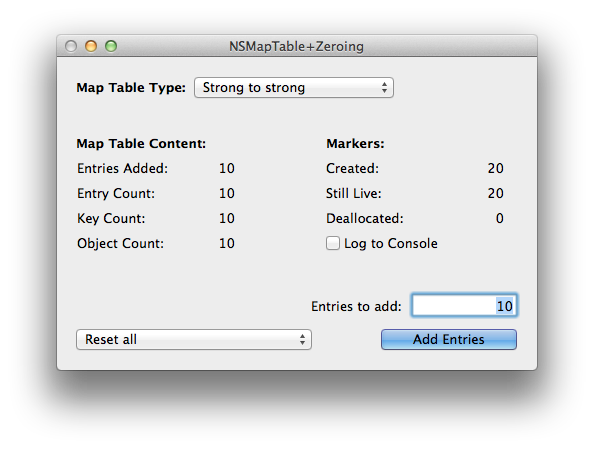

When clicking the ‘Add Entries’ button, the NSMapTable is fed the requested number of keys and objects, all freshly created instances of the special PARMarker class. PARMarker is a simple NSObject subclass that keeps track of the calls to init and dealloc. The entries (one PARMarker for the key, and one for the object) are added within an autorelease pool, so that markers not retained by the NSMapTable instance are deallocated when the pool is drained.

Let’s try the app. We will first add 10 entries to a strong-to-strong NSMapTable. After addition, the NSMapTable has a value of 10 for its count property, and has 10 keys and 10 objects. There were 20 markers created (10 keys and 10 values). None of the markers are deallocated: they are all retained by the NSMapTable instance. So far, so good.
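The bookkeeping behind these numbers can be sketched in Swift, under a few assumptions. A hypothetical `Marker` class plays the role of PARMarker by counting initializations and deinitializations. A plain Swift dictionary stands in for the strong-to-strong NSMapTable. Function scope replaces the autorelease pool, so any marker the table does not keep is released when `addEntries` returns.

```swift
// Shared counters, like the init/dealloc bookkeeping PARMarker does.
final class MarkerStats {
    var created = 0
    var deallocated = 0
}

// Hypothetical Swift stand-in for PARMarker, hashed by identity like NSObject.
final class Marker: Hashable {
    private let stats: MarkerStats
    init(stats: MarkerStats) {
        self.stats = stats
        stats.created += 1
    }
    deinit { stats.deallocated += 1 }

    static func == (lhs: Marker, rhs: Marker) -> Bool { lhs === rhs }
    func hash(into hasher: inout Hasher) { hasher.combine(ObjectIdentifier(self)) }
}

// Adds `count` fresh key/value pairs; markers the table does not keep are
// released when this function returns (standing in for the autorelease pool).
func addEntries(_ count: Int, to table: inout [Marker: Marker], stats: MarkerStats) {
    for _ in 0..<count {
        table[Marker(stats: stats)] = Marker(stats: stats)
    }
}

let stats = MarkerStats()
var strongToStrong: [Marker: Marker] = [:]   // strong keys and strong values
addEntries(10, to: &strongToStrong, stats: stats)
print(strongToStrong.count, stats.created, stats.deallocated)  // 10 20 0
strongToStrong.removeAll()                   // releasing the table's references
print(stats.deallocated)                     // 20
```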

Weak to Strong

Now, let’s get into trouble. For this, we will use an instance of NSMapTable with weak-to-strong references, and add 1 entry. Because the key is deallocated when the autorelease pool is drained, just after being added to the table, there should be no entry at all in our NSMapTable instance. Here is what we get instead.

Let’s start with the good news. The ‘Key count’ and ‘Object count’ of the map table are both at 0. These numbers are the values returned by keyEnumerator.allObjects.count and objectEnumerator.allObjects.count. This suggests that the entry was removed from the NSMapTable, along with its object, as soon as the key was deallocated. Or was it?

The first sign of trouble is the count property of NSMapTable, which returns 1: somehow, the NSMapTable still considers that it has 1 entry. More troubling, the object corresponding to the entry that should be gone is in fact still alive! Of the 2 initial PARMarker instances, only one was deallocated (the key), and the other one (the object) is still alive. This is very troublesome, as it essentially means the object can no longer be reached, and is “leaking”.

Technically, it’s not leaking, since it’s properly released when the NSMapTable is deallocated. But if the NSMapTable is kept alive and never used again, the object will also remain alive forever (there is no garbage-collector-like activity here). A number of operations can trigger a “purge”, though, and the object will eventually be released if the NSMapTable is used. For instance, a call to removeAllObjects will trigger such a purge.

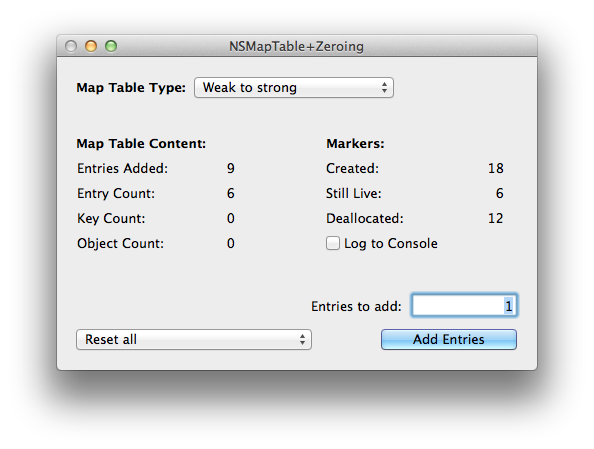

In addition, if we keep adding more entries, the object will eventually be released and deallocated. For instance, if we add 8 more entries, 1 by 1, the number of markers still alive is now 6, meaning that 3 out of the 9 objects added so far were purged. But we still have 6 objects dangling.

This completely defeats the purpose of [NSMapTable weakToStrongObjectsMapTable]. After lots of Googling, and to my great surprise, I eventually realized this behavior is (sort of) documented, as part of the Mountain Lion Release Notes, in a way that directly contradicts the promise of the official NSMapTable documentation:

However, weak-to-strong NSMapTables are not currently recommended, as the strong values for weak keys which get zero’d out do not get cleared away (and released) until/unless the map table resizes itself.

I wish this had been documented in the NSMapTable documentation (to report the issue like I did, please use the ‘Provide Feedback’ button in the Xcode documentation window, or use this link directly).
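To make the release note concrete, here is a toy model in Swift. It is emphatically not Apple’s implementation: a weak-to-strong table that only sweeps zeroed entries when it resizes or is explicitly cleared. With one entry whose key has died, it reproduces the symptoms observed above: `count` still reports 1, no live key is visible, and the value stays alive until a purge. The initial capacity of 4, the doubling policy and the `Box` class are arbitrary assumptions.

```swift
// Toy model (NOT Apple's implementation) of a weak-to-strong map table that,
// as the release note describes, only clears zeroed entries when it resizes.
final class LazyWeakToStrongTable<Key: AnyObject, Value> {
    private struct Entry {
        weak var key: Key?   // zeroing weak reference to the key
        var value: Value     // strong reference to the value
    }
    private var entries: [Entry] = []
    private var capacity = 4 // arbitrary initial capacity for the toy

    // Counts stored slots, dead or alive, like the `count` seen in the test app.
    var count: Int { entries.count }

    // Only keys that are still alive, like keyEnumerator.allObjects.
    var liveKeys: [Key] { entries.compactMap { $0.key } }

    func setObject(_ value: Value, forKey key: Key) {
        if let index = entries.firstIndex(where: { $0.key === key }) {
            entries[index].value = value
            return
        }
        if entries.count == capacity {
            // "Resize": the only automatic moment dead entries (and their values) go away.
            entries.removeAll { $0.key == nil }
            if entries.count == capacity { capacity *= 2 }
        }
        entries.append(Entry(key: key, value: value))
    }

    // An explicit purge, like removeAllObjects in the experiment.
    func removeAllObjects() { entries.removeAll() }
}

final class Box {}   // hypothetical stand-in for PARMarker

let table = LazyWeakToStrongTable<Box, Box>()
weak var orphanedValue: Box?
do {
    let key = Box()
    let value = Box()
    table.setObject(value, forKey: key)
    orphanedValue = value
}                                    // the key dies here; the value does not
print(table.count, table.liveKeys.count, orphanedValue != nil)  // 1 0 true
table.removeAllObjects()
print(orphanedValue == nil)          // true
```

In this model, adding entries until the capacity is reached would sweep the dead slot as well, much like the partial purges seen when adding more entries one by one.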

Strong to Weak

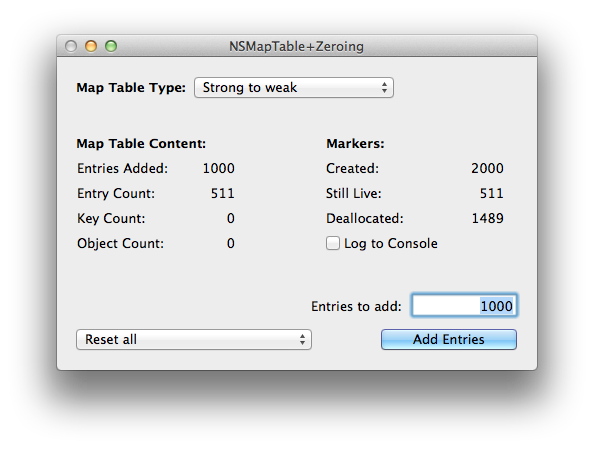

Neither the NSMapTable documentation nor the OS X release notes include any warning about strong-to-weak NSMapTables, but that does not mean we should not look for more trouble. Let’s use a strong-to-weak NSMapTable, and add 1000 entries. Again, as soon as the entries are added, our code exits an autorelease pool, but this time, the objects (not the keys) are deallocated and zeroed out. This should result in the removal of all the NSMapTable entries, followed by the deallocation of the keys. Let’s see.

Definitely more trouble. Out of the 1000 keys initially retained by the NSMapTable, only 489 have been deallocated, and 511 are still alive.

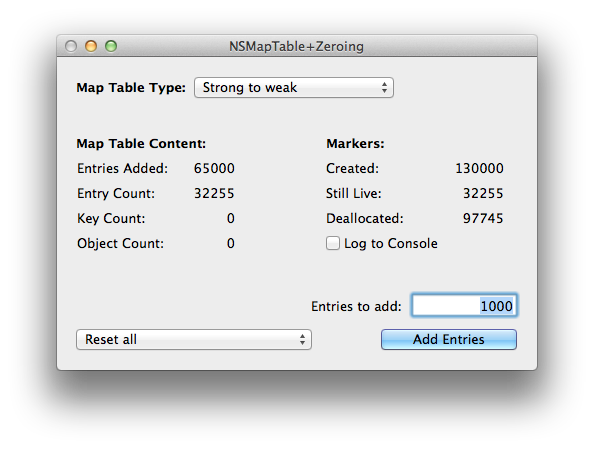

Let’s keep digging and add more entries by pressing the ‘Add Entries’ button another 64 times. After adding 65,000 key/value pairs in increments of 1000, we find that 32,255 of the 65,000 keys are still alive and held onto by the map table, while all the objects are deallocated (and zeroed out).

Heck, let’s add another 1000 entries. When getting to 132,000 key/value pairs, the number of “live” objects drops to 487. It looks like something happened internally (resizing?) and the NSMapTable purged most of the retained keys.

As pointed out by Michael Tsai, the expected behavior of a strong-to-weak NSMapTable is not very well documented, and is subject to interpretation. He expected keys to be retained until explicitly removed with removeObjectForKey: (though in that case, I would expect the keyEnumerator to still include those keys). I would personally have expected a behavior symmetrical to the weak-to-strong NSMapTable, where zeroing of the object would remove the entry from the table and release the key. Based on what I am seeing, the latter is probably what NSMapTable is supposed to do. What happens in practice, though, is worse than either of those two possibilities: the key is retained for a while and released eventually.

One more tidbit of information: even though the non-released keys are not listed in the keyEnumerator, you can still call removeObjectForKey: with those, which will in fact purge the corresponding key. You can try this in the test app using the action “Remove Live Keys” in the popup menu at the bottom.
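A toy Swift model is consistent with these observations, though it is again an assumption and not Apple’s code. The table keeps its strong key in storage after the weak value is zeroed, hides that entry from key enumeration, and still honors removal by key. `Box` is a hypothetical stand-in for PARMarker, and the weak `liveKey` variable mimics how the test app can still reach the live markers.

```swift
// Toy model (NOT Apple's implementation) of a strong-to-weak table whose entries
// linger, keeping their keys alive, after the weak value has been zeroed.
final class LazyStrongToWeakTable<Key: AnyObject, Value: AnyObject> {
    private struct Entry {
        let key: Key             // strong reference to the key
        weak var value: Value?   // zeroing weak reference to the value
    }
    private var entries: [Entry] = []

    // Like keyEnumerator.allObjects: entries whose value was zeroed are hidden...
    var visibleKeys: [Key] { entries.filter { $0.value != nil }.map { $0.key } }

    // ...yet they are still stored, and their keys still retained.
    var storedEntryCount: Int { entries.count }

    func setObject(_ value: Value, forKey key: Key) {
        entries.removeAll { $0.key === key }
        entries.append(Entry(key: key, value: value))
    }

    // Removal by key also works for hidden entries, releasing the key.
    func removeObject(forKey key: Key) {
        entries.removeAll { $0.key === key }
    }
}

final class Box {}   // hypothetical stand-in for PARMarker

let table = LazyStrongToWeakTable<Box, Box>()
weak var liveKey: Box?   // how the test app can still reach a live marker
do {
    let key = Box()
    let value = Box()
    table.setObject(value, forKey: key)
    liveKey = key
}                         // the value dies here; the table still retains the key
print(table.visibleKeys.count, table.storedEntryCount, liveKey != nil)  // 0 1 true
if let key = liveKey { table.removeObject(forKey: key) }
print(liveKey == nil)     // true
```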

Conclusions

No matter how one interprets the NSMapTable documentation, it is in practice not as magic as one would like it to be. Both weak-to-strong and strong-to-weak NSMapTables have problems dealing with zeroing weak references. The behavior is dependent on the type of table, its current size, and its past activity. In both cases, the behavior is likely deterministic, but it depends on the internal implementation of NSMapTable. From the outside, it is impossible to predict and should be considered undefined.

There are two obvious consequences to the leaking of entries with zeroing weak references in NSMapTable:

- Memory leak. This is only significant if the map table is used with large objects and/or a large number of objects, as the internal purging appears to be less frequent with larger sizes, and the number of objects allowed to stay alive grows accordingly. Even with a small number of objects, unexpected retain cycles, which weak references are supposed to break, may also form and remain stuck for a very long time if the map table is not used much.

- Performance issue. If the strongly referenced objects have any significant activity (e.g. responding to notifications or writing to files), they will not only add to the memory footprint but also remain active much longer than expected. For instance, they might remain registered for notifications or filesystem events, running code that is no longer needed (and maybe even corrupting your application state in unpredictable ways).

The only real workaround is to have APIs for explicit removal of entries in the classes that use NSMapTables internally. The API clients of these classes should then add explicit calls in their dealloc methods. For FooNotificationCenter, this would mean adding one more method, which would need to balance any use of addObserver:selector:name:.
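The workaround can be sketched in Swift with a hypothetical `removeObserver(_:)` method (the name is an assumption) that each observer calls from its `deinit`, the Swift counterpart of dealloc. Registrations are keyed by `ObjectIdentifier`, so removal is a dictionary lookup. Removing the entry before the observer’s memory is freed also means a later object allocated at the same address cannot inherit a stale registration.

```swift
// Hypothetical Swift stand-in for FooNotificationCenter with explicit removal.
final class FooNotificationCenter {
    private struct Registration {
        weak var observer: AnyObject?
        let name: String
        let handler: (AnyObject) -> Void   // plays the role of the selector
    }
    // Keyed by observer identity (one registration per observer in this sketch).
    private var registrations: [ObjectIdentifier: Registration] = [:]

    var count: Int { registrations.count }

    func addObserver(_ observer: AnyObject, name: String,
                     handler: @escaping (AnyObject) -> Void) {
        registrations[ObjectIdentifier(observer)] =
            Registration(observer: observer, name: name, handler: handler)
    }

    // Balances addObserver(_:name:handler:); observers call it from deinit.
    func removeObserver(_ observer: AnyObject) {
        registrations[ObjectIdentifier(observer)] = nil
    }

    func post(name: String) {
        for registration in registrations.values where registration.name == name {
            if let observer = registration.observer { registration.handler(observer) }
        }
    }
}

final class Listener {
    private let center: FooNotificationCenter
    var received = 0

    init(center: FooNotificationCenter) {
        self.center = center
        center.addObserver(self, name: "Foo") { ($0 as! Listener).received += 1 }
    }

    deinit { center.removeObserver(self) }   // the explicit, balancing call
}

let center = FooNotificationCenter()
var listener: Listener? = Listener(center: center)
center.post(name: "Foo")
print(listener!.received, center.count)   // 1 1
listener = nil                            // deinit unregisters the observer
print(center.count)                       // 0
```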

This is a shame. Just as ARC has taken memory management out of the hands of developers and reduced the number of associated bugs, the combination of NSMapTable and zeroing weak references should further reduce the code needed to clean up more complex object relationships, as well as the memory consumption and resource usage of existing apps.

As Michael Tsai wonders, “NSMapTable is an important building block, so it’s a mystery why Apple hasn’t gotten it right yet”. I also think his interpretation is correct: without weak-deallocation notifications, the owners of zeroed references are unaware of the change. The zeroing of a reference is done by writing a bunch of 0s at the correct memory address(es), corresponding to the variable(s) that referenced the deallocated object. This process has to be as efficient as possible and thread-safe. Any extra work to keep track of the object graphs or maintain a callback table would need to happen upon the deallocation of every weakly referenced object, which could have a significant impact on performance. Is Apple ready to make that happen?

The implementation of NSMapTable, though, does not have to depend on the general implementation of zeroing weak references that Objective-C has in place. Changing the Objective-C runtime just to improve NSMapTable may be overkill. Instead, it seems a proper implementation of NSMapTable could be done using an alternative approach to zeroing weak references, like MAZeroingWeakRef. We are left waiting for Apple to fix NSMapTable, or implementing our own version (assuming it can even be done reliably).
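As a rough illustration of the weak-deallocation notifications discussed above, here is a Swift sketch in which each key owns a sentinel object whose `deinit` tells the table to drop the entry. The value is therefore released as soon as the key dies. The `SentinelHolder` protocol, which requires keys to cooperate by storing their sentinels, is a hypothetical assumption. In Objective-C, such a sentinel could instead be attached with `objc_setAssociatedObject`. This is not how MAZeroingWeakRef works, and re-entrant cases (a released value freeing another key of the same table) are not handled.

```swift
// Fires a callback when it is deinitialized, i.e. when its owner goes away.
final class DeallocSentinel {
    private let onDealloc: () -> Void
    init(onDealloc: @escaping () -> Void) { self.onDealloc = onDealloc }
    deinit { onDealloc() }
}

// Hypothetical requirement: keys keep their sentinels alive for their own lifetime.
protocol SentinelHolder: AnyObject {
    var sentinels: [DeallocSentinel] { get set }
}

// Weak-to-strong table with eager removal: keys are never retained (only their
// identity is stored), values are retained until their key's sentinel fires.
final class EagerWeakToStrongTable<Key: SentinelHolder, Value> {
    private var storage: [ObjectIdentifier: Value] = [:]

    var count: Int { storage.count }

    func setObject(_ value: Value, forKey key: Key) {
        let id = ObjectIdentifier(key)
        if storage.updateValue(value, forKey: id) == nil {
            // First insertion for this key: ask to be notified when it is deallocated.
            key.sentinels.append(DeallocSentinel { [weak self] in
                self?.storage[id] = nil
            })
        }
    }

    func object(forKey key: Key) -> Value? { storage[ObjectIdentifier(key)] }
}

final class Box: SentinelHolder {   // hypothetical stand-in for PARMarker
    var sentinels: [DeallocSentinel] = []
}

let table = EagerWeakToStrongTable<Box, Box>()
weak var releasedValue: Box?
do {
    let key = Box()
    let value = Box()
    table.setObject(value, forKey: key)
    releasedValue = value
    print(table.count, table.object(forKey: key) === value)  // 1 true
}                                    // key dies -> sentinel fires -> entry removed
print(table.count, releasedValue == nil)                     // 0 true
```

Unlike the lazy models, `count` here drops to 0 as soon as the key goes away. The price is one extra object and one callback per key, which is the kind of per-deallocation cost discussed above.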